ChatGPT Clone With Ollama & Gradio

In this post, I'll show you how to use Ollama to build a fully local, open-source ChatGPT-style chatbot from scratch. Ollama can also run multiple models concurrently, which leaves plenty of room to experiment.

If you are new to Ollama, go through an introductory guide first to get the basics clear.

Alright, let's get started. We will use the llama2 model in this post. First, verify that llama2 is available locally:

    $ ollama list
    NAME                    ID            SIZE    MODIFIED
    gemma:latest            430ed3535049  5.2 GB  2 hours ago
    hogwards-model:latest   4484e4280376  3.8 GB  3 weeks ago
    llama2:latest           78e26419b446  3.8 GB  3 weeks ago
    superman:latest         eab3b004339f  5.2 GB  2 hours ago

    $ ollama run llama2

Let's call our chat application Superchat.
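
The same check that `ollama list` performs can also be done from Python before the app starts. The sketch below assumes the Ollama server exposes a `GET /api/tags` endpoint that returns a JSON object with a `models` list, each entry carrying a `name` such as `llama2:latest`. The helper names (`installed_model_names`, `is_model_installed`, `fetch_tags`) are hypothetical and only for illustration.

```python
import json
import urllib.request

# Assumption: Ollama's default local address; adjust if your server differs.
OLLAMA_BASE_URL = "http://localhost:11434"


def installed_model_names(tags_payload):
    """Extract model names from a parsed /api/tags response body."""
    return [m["name"] for m in tags_payload.get("models", [])]


def is_model_installed(model, tags_payload):
    """Return True if `model` (with or without a tag) appears in the payload."""
    # A bare name like "llama2" is treated as "llama2:latest".
    wanted = model if ":" in model else f"{model}:latest"
    return wanted in installed_model_names(tags_payload)


def fetch_tags(base_url=OLLAMA_BASE_URL, timeout=5):
    """Fetch the list of local models from a running Ollama server."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        return json.load(resp)
```

With the server running, `is_model_installed("llama2", fetch_tags())` should tell you whether the model used in the rest of this post is present.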

Create a superchat folder and create app.py in it.

    import requests
    import json

    # Ollama's local text-generation endpoint
    url = "http://localhost:11434/api/generate"

    headers = {
        'Content-Type': 'application/json'
    }

    data = {
        "model": "llama2",
        "stream": False,  # ask for one complete JSON reply instead of a stream
        "prompt": 'Why does the Earth revolve?'
    }

    response = requests.post(url, headers=headers, data=json.dumps(data))

    if response.status_code == 200:
        # The generated text is in the "response" field of the JSON body
        response_txt = response.text
        data = json.loads(response_txt)
        actual_response = data["response"]
        print(actual_response)
    else:
        print("Error:", response.status_code, response.text)

Let's run app.py

    $ python app.py
    The Earth revolves around the sun due to a combination of gravitational forces and the conservation of angular momentum.

    Gravitational forces: The Earth is attracted to the sun by gravity, which pulls it inward. The strength of this attraction depends on the mass of the Earth and the distance between it and the sun. As the Earth orbits the sun, it follows an elliptical path due to the balance between this gravitational force and the centrifugal force (which arises from the Earth's rotation).

Alright, our API is working.
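
The request above sets `"stream": False`, so the whole answer arrives in one JSON object. If you switch it to `True`, my understanding is that Ollama sends newline-delimited JSON instead, where each line carries a `response` fragment and a `done` flag. The sketch below shows how such lines could be joined back into one reply. `join_stream_chunks` is a hypothetical helper, and the chunk layout is an assumption to verify against your Ollama version.

```python
import json


def join_stream_chunks(lines):
    """Assemble the full reply from newline-delimited JSON chunks.

    Each non-empty line is expected to be one JSON object with a
    "response" text fragment and a "done" flag (assumed layout).
    """
    parts = []
    for line in lines:
        if not line.strip():
            continue  # skip blank keep-alive lines
        chunk = json.loads(line)
        parts.append(chunk.get("response", ""))
        if chunk.get("done"):
            break  # the final chunk marks the end of the reply
    return "".join(parts)
```

With `requests`, the lines could come from `requests.post(url, json=data, stream=True).iter_lines()`, which would let the UI show text as it is generated instead of waiting for the full answer.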

Next, we will use Gradio to build the frontend.

    $ pip install gradio

Modify the program to use a Gradio Interface. We wrap the API call in a function that accepts a prompt as input and returns the model's response as output.

    import requests
    import json
    import gradio as gr

    url = "http://localhost:11434/api/generate"

    headers = {
        'Content-Type': 'application/json'
    }

    def generate_response(prompt):
        """Send the prompt to Ollama and return the generated text."""
        data = {
            "model": "llama2",
            "stream": False,
            "prompt": prompt
        }

        response = requests.post(url, headers=headers, data=json.dumps(data))

        if response.status_code == 200:
            response_txt = response.text
            data = json.loads(response_txt)
            actual_response = data["response"]
            return actual_response
        else:
            print("Error:", response.status_code, response.text)
            # Return a message so the UI shows the failure instead of an empty box
            return f"Error: {response.status_code}"

    # One text box in, one text box out
    iface = gr.Interface(
        fn=generate_response,
        inputs=["text"],
        outputs=["text"]
    )

    if __name__ == "__main__":
        iface.launch()

Run the program

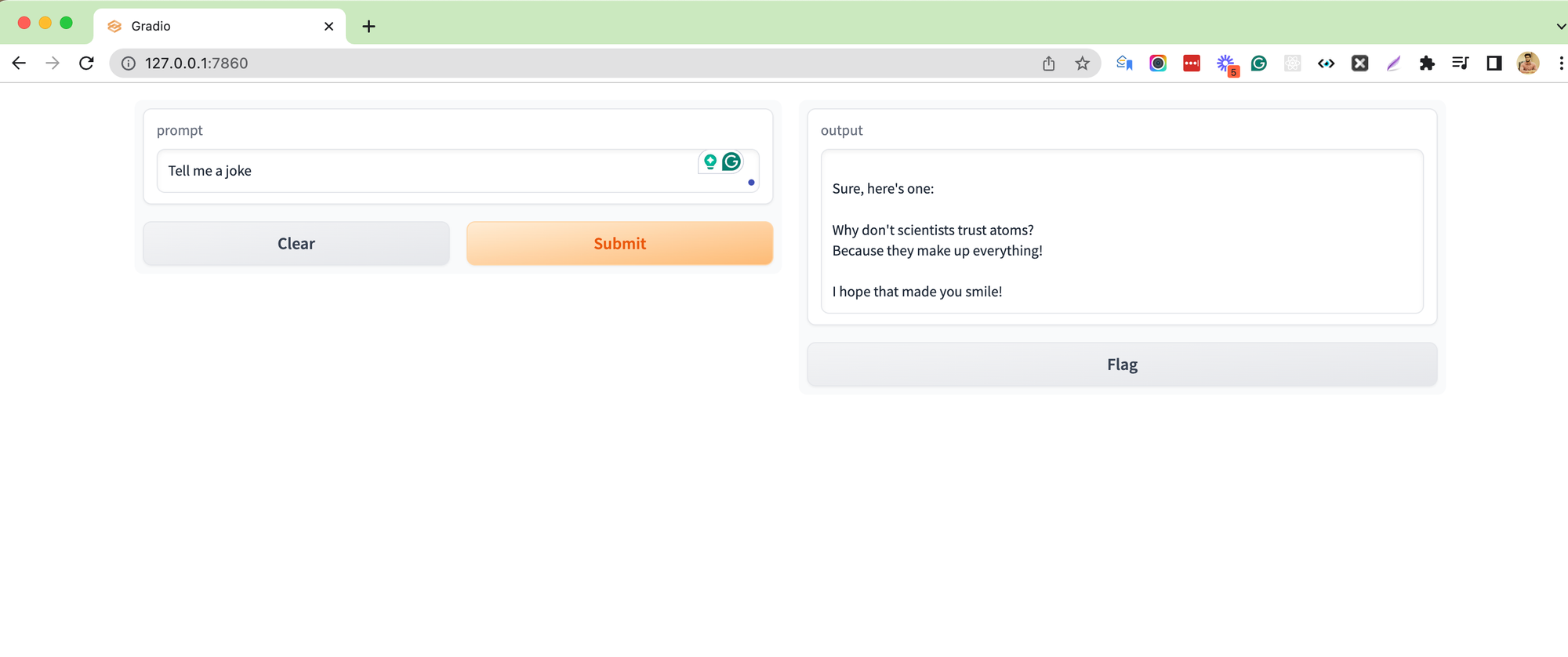

$ python app.py

Here we go! Our local chatbot is working.

But there is a problem: if we ask the model to tell us our last message, it cannot remember it, because each request is sent without any earlier context. So let's implement chat history.

    import requests
    import json
    import gradio as gr

    url = "http://localhost:11434/api/generate"

    headers = {
        'Content-Type': 'application/json'
    }

    # Every prompt and reply so far, shared by the whole app process
    conversation_history = []

    def generate_response(prompt):
        """Send the prompt together with all earlier turns and return the reply."""
        conversation_history.append(prompt)

        # The model only sees what is in the request, so resend the full history
        full_prompt = "\n".join(conversation_history)

        data = {
            "model": "llama2",
            "stream": False,
            "prompt": full_prompt
        }

        response = requests.post(url, headers=headers, data=json.dumps(data))

        if response.status_code == 200:
            response_txt = response.text
            data = json.loads(response_txt)
            actual_response = data["response"]
            conversation_history.append(actual_response)
            return actual_response
        else:
            print("Error:", response.status_code, response.text)
            # Return a message so the UI shows the failure instead of an empty box
            return f"Error: {response.status_code}"

    iface = gr.Interface(
        fn=generate_response,
        inputs=["text"],
        outputs=["text"]
    )

    if __name__ == "__main__":
        iface.launch()

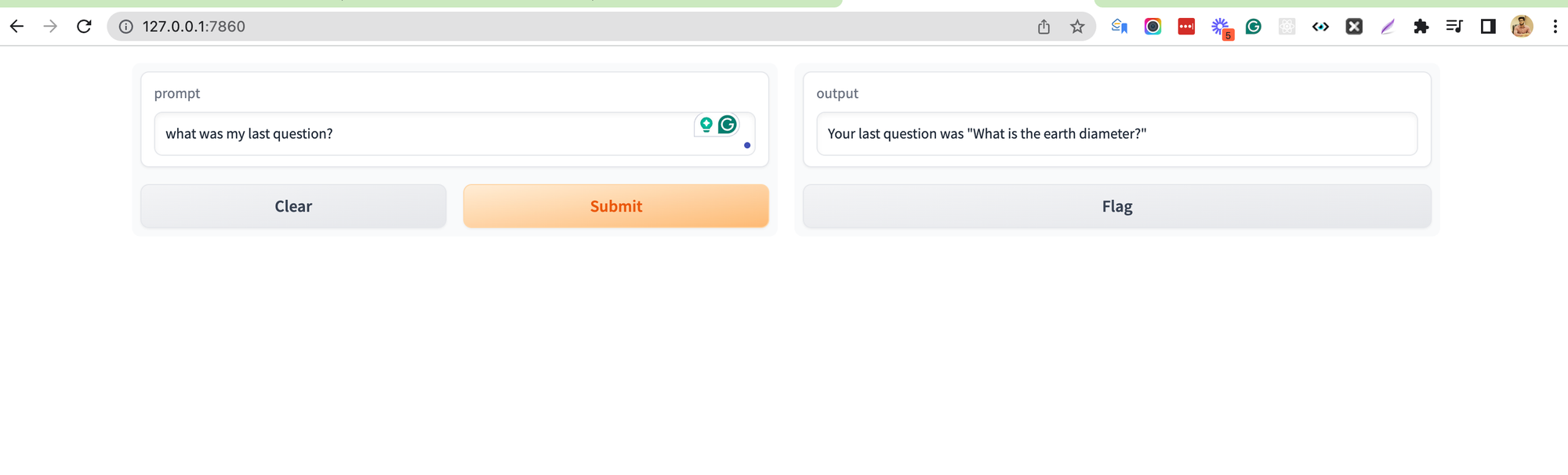

Output

So now our chatbot remembers history and sends history along with a new prompt
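
Joining the history with plain newlines works, but the model cannot tell who said what, and the prompt grows with every turn. One possible refinement, sketched below, labels each turn and keeps only the most recent ones. The `build_prompt` helper, the `User:`/`Assistant:` labels, and the `max_turns` default are all assumptions for illustration, not something the model requires.

```python
def build_prompt(history, new_message, max_turns=10):
    """Format labeled turns plus the new message into a single prompt.

    `history` is a list of (user_text, assistant_text) pairs. Only the most
    recent `max_turns` pairs are kept so the prompt does not grow unbounded.
    """
    # history[-0:] would return everything, so handle zero explicitly
    recent = history[-max_turns:] if max_turns > 0 else []
    lines = []
    for user_text, assistant_text in recent:
        lines.append(f"User: {user_text}")
        lines.append(f"Assistant: {assistant_text}")
    lines.append(f"User: {new_message}")
    lines.append("Assistant:")  # cue the model to answer as the assistant
    return "\n".join(lines)
```

To use it, `generate_response` would store `(prompt, actual_response)` pairs in `conversation_history` and pass `build_prompt(conversation_history, prompt)` as the `"prompt"` value.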

FAQs about ChatGPT Clones

What is a ChatGPT Clone?

A ChatGPT clone is an application that imitates OpenAI's ChatGPT. It uses similar language models and algorithms to generate human-like responses in a conversational format.

How can I build a ChatGPT Clone?

There are several ways to build a ChatGPT clone. One is to use Ollama: you can run open-source models such as Llama 2, Mistral, or Gemma locally and build a chatbot application on top of them.

Can I customize the responses of my ChatGPT Clone?

Yes, you have the ability to tailor responses from your ChatGPT Clone. By customizing prompts and providing specific guidelines, you can shape the generated responses to meet your needs. For instance, if you require HTML markup in the response, you can structure your prompts accordingly. This flexibility allows for personalized interactions that align with your preferences and objectives.
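
One way to apply such guidelines on every request is a system prompt. The sketch below assumes that Ollama's `/api/generate` endpoint accepts an optional `system` string and an `options` object with a `temperature` value; check this against the API documentation for your version. `build_generate_payload` is a hypothetical helper name.

```python
def build_generate_payload(prompt, model="llama2", system=None, temperature=None):
    """Build a request body for /api/generate with optional customization."""
    payload = {"model": model, "stream": False, "prompt": prompt}
    if system is not None:
        # Standing instructions applied to the reply (assumed field name)
        payload["system"] = system
    if temperature is not None:
        # Lower values tend to give more deterministic replies
        payload["options"] = {"temperature": temperature}
    return payload
```

For example, `build_generate_payload("List three fruits", system="Answer using HTML markup only.")` produces a body that can be passed to `requests.post(url, json=...)` in place of the hand-written `data` dictionary.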

How can I add styling to the frontend of my ChatGPT Clone?

To improve the look of your ChatGPT clone's frontend, use the customization options in Gradio or Streamlit. Both support theming, so you can adjust colors, fonts, and layout to design an interface that suits your preferences and engages your audience.