AI Meets Style: Deep Learning-Powered Sunglass Color Customization

Customizing sunglasses has never been easier. Traditional methods require extensive photoshoots to capture every lens color and variation, a time-consuming and costly process. Our AI-powered application transforms this workflow. Using deep learning and computer vision, it generates a full spectrum of lens colors and shades from a single image of a sunglass model. This eliminates the need for multiple photoshoots, streamlines product visualization, and offers an interactive way to explore and customize styles in real time. With this solution, showcasing product variety becomes seamless, efficient, and visually captivating, redefining the way sunglasses are presented and experienced.

From Manual Annotations to AI-Powered Precision: The Journey Behind the Solution

Building an automated solution for sunglass color customization wasn’t straightforward—it was a journey of trial, innovation, and transformation. Initially, the process involved manually annotating sunglass lenses by marking their coordinates. While effective for small-scale experiments, this approach quickly became tedious and inefficient. Each step, from detecting the lens regions to applying masks and manually changing colors, required significant time and effort. Scaling this process for numerous sunglass models was simply impractical.

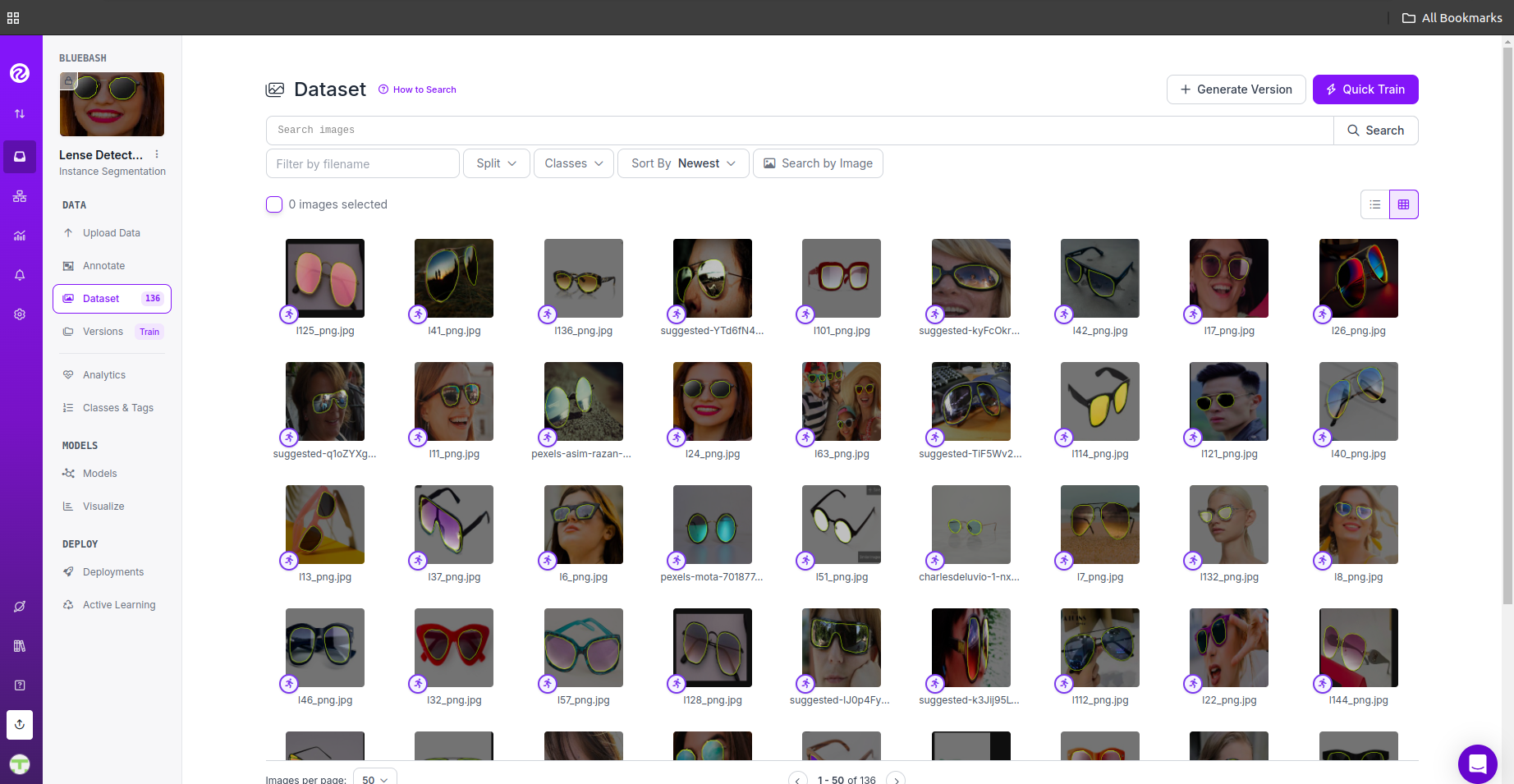

To overcome this, we shifted gears and focused on creating a robust, scalable solution using deep learning. The first step was to compile a dataset by manually annotating over 200 images of sunglasses, marking lens regions with precision. This dataset laid the foundation for training an instance segmentation model, which learned to detect and segment sunglass lenses in new images automatically, so each new image no longer has to be annotated by hand.

This breakthrough enabled the seamless application of masks and automated color transformations across a wide variety of sunglasses. The evolution from manual effort to AI-driven automation has not only streamlined the process but also unlocked new possibilities for scaling and efficiency, making the solution both practical and powerful.

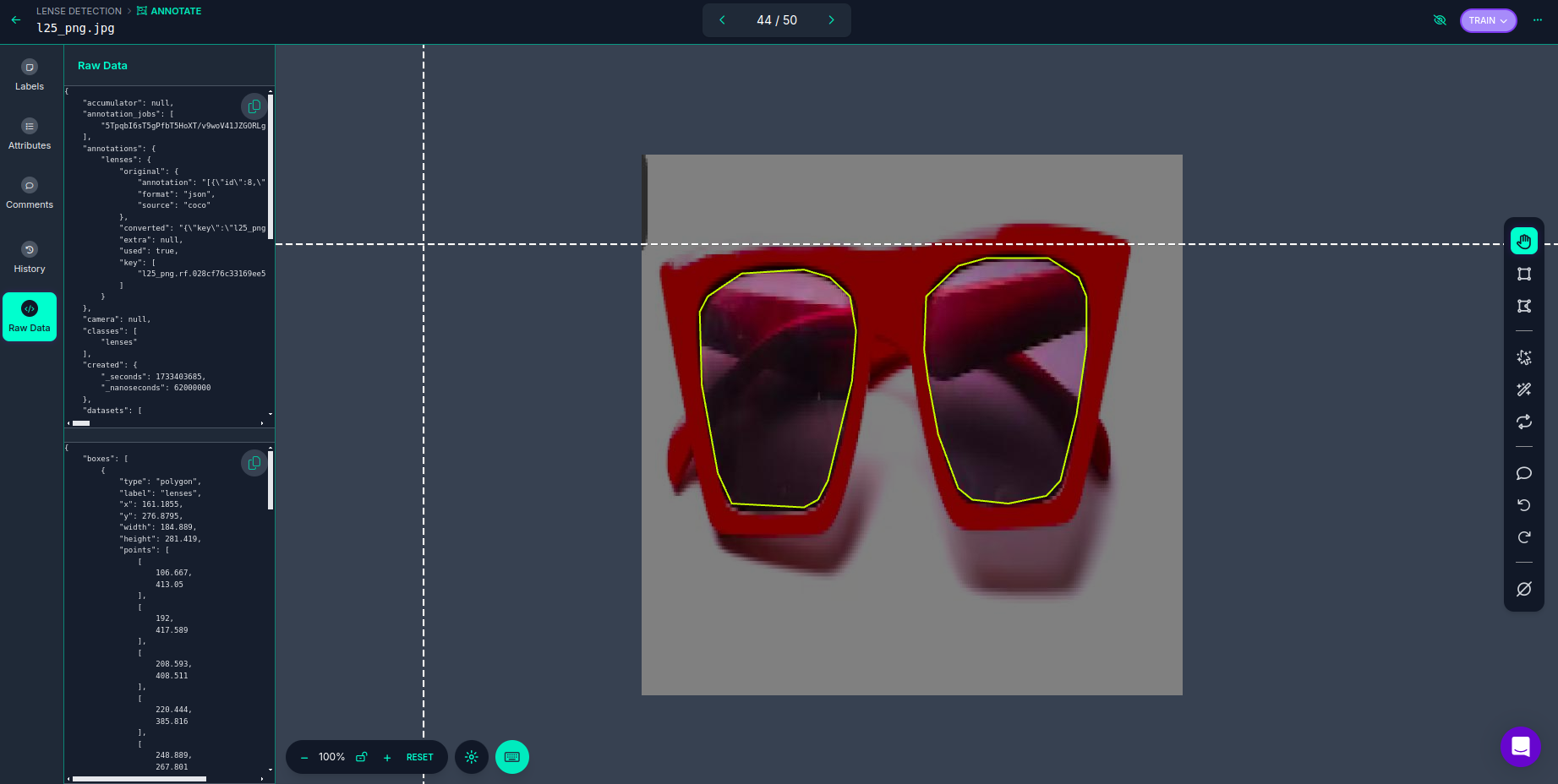

```python
annotation = {
    "boxes": [
        {
            "type": "polygon", "label": "lenses",
            "x": 161.1855, "y": 276.8795, "width": 184.889, "height": 281.419,
            "points": [[106.667, 413.05], [192, 417.589], [208.593, 408.511], [220.444, 385.816],
                       [248.889, 267.801], [253.63, 208.794], [246.519, 167.943], [222.815, 145.248],
                       [192, 136.17], [118.519, 140.709], [78.222, 167.943], [68.741, 186.099],
                       [71.111, 272.34], [90.074, 376.738], [106.667, 413.05]],
            "keypoints": [],
        },
        {
            "type": "polygon", "label": "lenses",
            "x": 430.222, "y": 267.8015, "width": 192, "height": 290.497,
            "points": [[374.519, 394.894], [391.111, 408.511], [433.778, 413.05], [478.815, 403.972],
                       [495.407, 385.816], [514.37, 308.652], [526.222, 226.95], [526.222, 167.943],
                       [516.741, 145.248], [481.185, 122.553], [407.704, 122.553], [374.519, 131.631],
                       [336.593, 167.943], [334.222, 231.489], [338.963, 267.801], [350.815, 331.348],
                       [374.519, 394.894]],
            "keypoints": [],
        },
    ],
    "height": 640,
    "key": "l25_png.rf.028cf76c33169ee533c04ff02bacb439.jpg",
    "width": 640,
}
```

This annotation represents a structured dataset entry used for training a deep learning model to detect sunglass lenses. The data is in JSON format and describes the geometric properties of two lens regions on a sunglasses image. Here's a breakdown:

Key Components of the Annotation:

- boxes: An array of two objects, one per lens. Each object includes:
  - type: The annotation type, here "polygon", meaning the lens shapes are traced with polygons rather than bounding boxes.
  - label: The annotated class, "lenses".
  - x, y, width, height: The bounding box of the polygon, with x and y giving its center and width and height its size in pixels.
  - points: The polygon's vertices as [x, y] pairs, tracing the outline of the lens. The last vertex repeats the first, closing the shape.
  - keypoints: Empty here; it could hold additional landmarks or key features.
- height and width: The dimensions of the entire image, 640x640 pixels in this case.
- key: A unique identifier linking the annotation to its image file (l25_png.rf.028cf76c33169ee533c04ff02bacb439.jpg).
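As a quick check of the x, y, width and height fields, the sketch below recomputes them from the first lens's points. It assumes, based on the numbers in this entry, that x and y are the center of the polygon's bounding box; `polygon_bbox` is a hypothetical helper, not part of any annotation tool's API.

```python
def polygon_bbox(points):
    """Return (x_center, y_center, width, height) of the box enclosing a polygon."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    x_min, x_max = min(xs), max(xs)
    y_min, y_max = min(ys), max(ys)
    return ((x_min + x_max) / 2, (y_min + y_max) / 2, x_max - x_min, y_max - y_min)

# First lens polygon, copied from the annotation entry
lens_1 = [[106.667, 413.05], [192, 417.589], [208.593, 408.511], [220.444, 385.816],
          [248.889, 267.801], [253.63, 208.794], [246.519, 167.943], [222.815, 145.248],
          [192, 136.17], [118.519, 140.709], [78.222, 167.943], [68.741, 186.099],
          [71.111, 272.34], [90.074, 376.738], [106.667, 413.05]]

x, y, w, h = polygon_bbox(lens_1)
print(round(x, 4), round(y, 4), round(w, 3), round(h, 3))
```

The printed values match the entry's x = 161.1855, y = 276.8795, width = 184.889 and height = 281.419, which is what the center interpretation predicts.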

The polygons in the points field trace the contours of the sunglass lenses, so the model learns not only where the lenses are but also their exact boundaries. A bounding box only describes a rectangle, while a polygon follows the true, often irregular, shape of a lens. That difference matters for recoloring: a color applied inside a rectangle would also tint the frame, skin, and background around the lens. Training an instance segmentation model on polygon annotations teaches it to produce pixel-level lens masks, which is what accurate lens color variants and other visual effects require, and it helps the model segment lenses reliably in new images taken in varied conditions.
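To put a number on that extra precision, the shoelace formula gives the area enclosed by a polygon, which can be compared with the area of its bounding box. This sketch applies it to the second lens from the annotation entry; `shoelace_area` is an illustrative helper name.

```python
def shoelace_area(points):
    """Area enclosed by a simple polygon, via the shoelace formula."""
    twice_area = 0.0
    # Pair each vertex with the next one, wrapping around at the end.
    # A repeated closing vertex only adds a zero term, so it is harmless.
    for (x1, y1), (x2, y2) in zip(points, points[1:] + points[:1]):
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2

# Second lens polygon, copied from the annotation entry
lens_2 = [[374.519, 394.894], [391.111, 408.511], [433.778, 413.05], [478.815, 403.972],
          [495.407, 385.816], [514.37, 308.652], [526.222, 226.95], [526.222, 167.943],
          [516.741, 145.248], [481.185, 122.553], [407.704, 122.553], [374.519, 131.631],
          [336.593, 167.943], [334.222, 231.489], [338.963, 267.801], [350.815, 331.348],
          [374.519, 394.894]]

xs = [p[0] for p in lens_2]
ys = [p[1] for p in lens_2]
bbox_area = (max(xs) - min(xs)) * (max(ys) - min(ys))
poly_area = shoelace_area(lens_2)
print(f"polygon: {poly_area:.0f} px^2, box: {bbox_area:.0f} px^2, ratio: {poly_area / bbox_area:.2f}")
```

The ratio shows how much of the bounding box is actually lens; the remainder is the area a box-based recolor would tint by mistake.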

Technologies Used

To bring this innovative sunglass color customization solution to life, we leveraged several powerful technologies that streamline the development process and enhance the performance of the application:

- Roboflow: Roboflow is an essential tool for simplifying the creation, management, and deployment of computer vision models. It provided us with the ability to efficiently annotate our dataset and train our deep learning model for instance segmentation. Roboflow’s intuitive interface and seamless integration with other frameworks allowed us to accelerate model training and deployment.

- CV2 (OpenCV): OpenCV is a powerful computer vision library, imported in Python as cv2. In our application, it was instrumental in processing and manipulating images for lens detection, segmentation, and color transformation. Its extensive collection of image processing functions enabled us to apply precise visual effects and automate lens customization with ease.

- Streamlit: Streamlit is a versatile framework for building interactive web applications with Python. It enabled us to quickly develop a user-friendly interface where users can upload images, interactively change lens colors, and visualize the results in real time. Streamlit's ease of use and rapid development capabilities made it the perfect choice for creating a smooth and engaging front-end experience.

- Poetry: To manage our project’s dependencies and ensure a smooth development workflow, we used Poetry, a modern Python dependency management and packaging tool. Poetry helped us maintain a clean and reproducible environment, streamlining the installation of necessary libraries and simplifying the deployment of our application.

From Data Annotation to Deployment: Leveraging Roboflow for Instance Segmentation

Roboflow played a pivotal role in developing our custom solution for sunglasses lens color transformation. Here's how I utilized this powerful platform to bring the project to life:

First, I created a project on Roboflow specifically tailored for the instance segmentation task, which was essential for detecting and segmenting the lenses in sunglasses images. The next step involved collecting high-quality images of sunglasses, which I downloaded from various sources to ensure diverse and rich data for training. I then manually annotated over 200 images, carefully marking the lenses with polygons to provide the necessary detail for the model to accurately understand lens shapes.

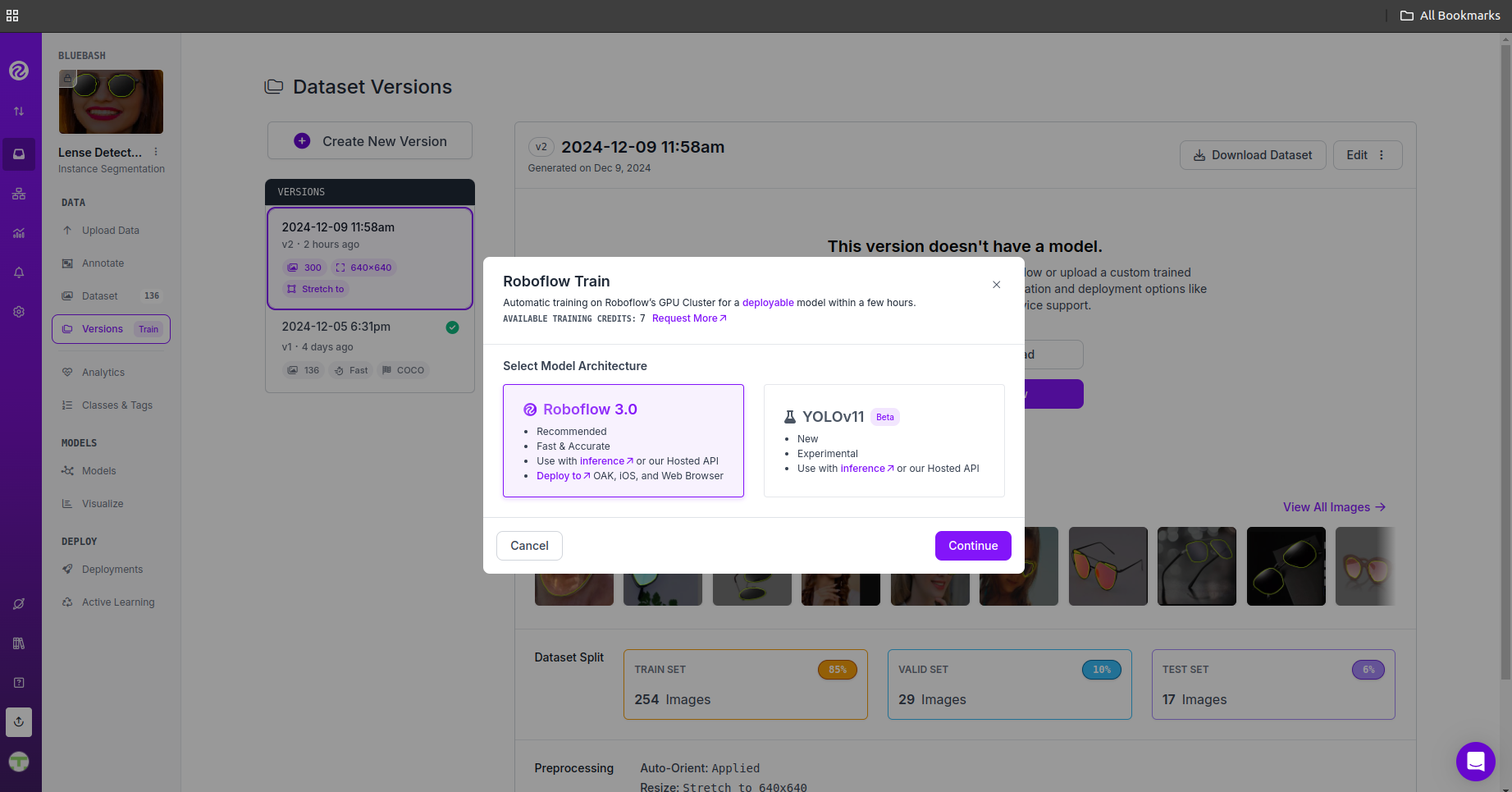

After gathering the annotated images, I processed the data to ensure it was ready for training. Roboflow 3.0's enhanced version allowed me to easily configure the dataset for deep learning tasks. The platform's intelligent model selection feature analyzed the data and automatically chose the most suitable deep learning model for instance segmentation. It likely selected a version of YOLO, a popular architecture for object detection, optimized for our task.

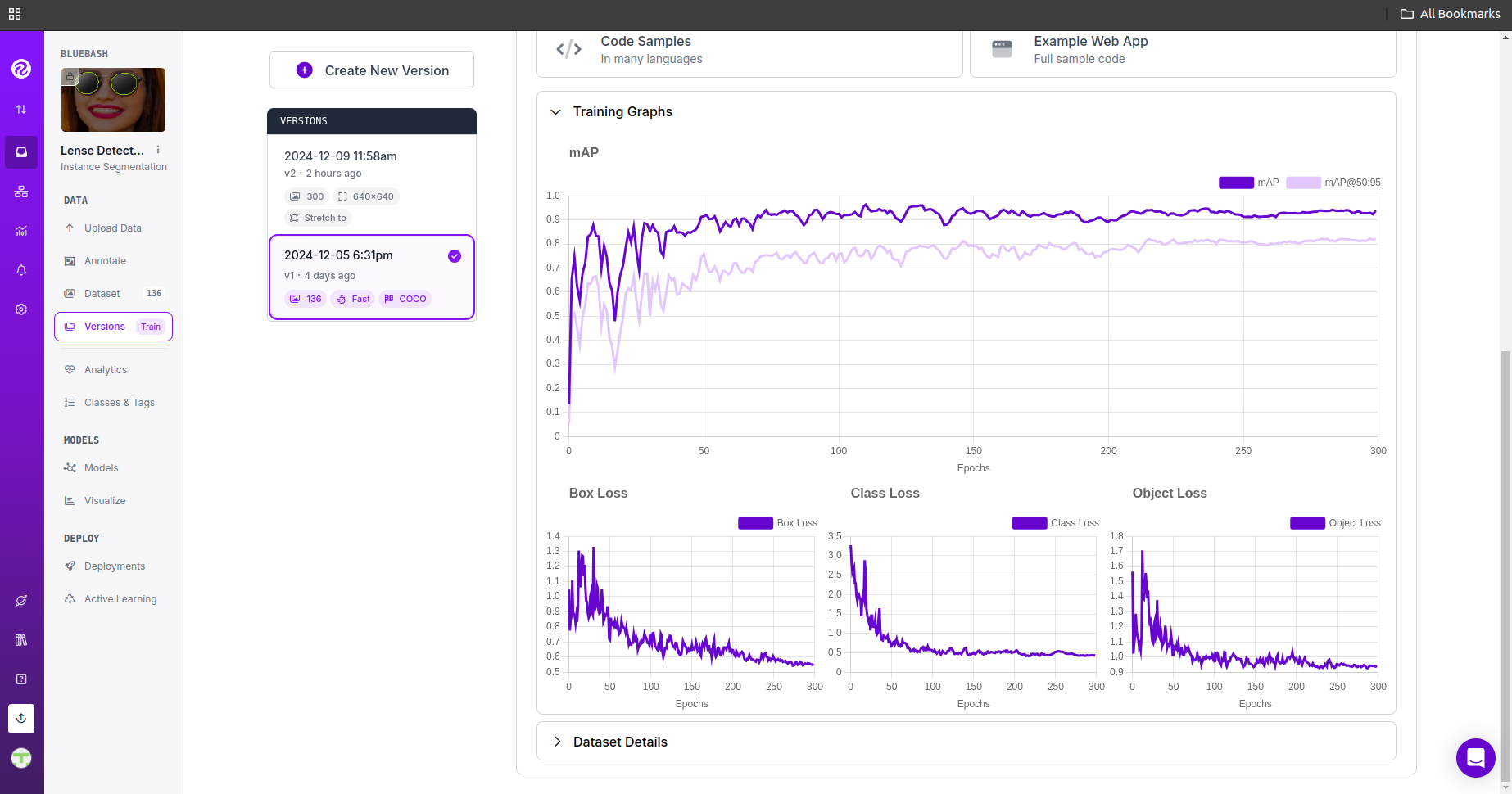

I then trained the model for over 200 epochs. During training, Roboflow displayed performance graphs for key metrics such as accuracy and loss, which let me watch for overfitting and judge whether the model was approaching the desired accuracy or needed adjustments. Following these learning curves confirmed that training was progressing effectively and guided the process toward a final model that is robust and reliable for lens segmentation.
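Roboflow draws these curves during training. As a generic illustration of how they are read (not a description of anything Roboflow does internally), the sketch applies a patience-based early-stopping rule to per-epoch validation losses. The loss values are made up and `best_epoch` is a hypothetical helper.

```python
def best_epoch(val_losses, patience=10):
    """Return (index of lowest validation loss, whether training has stalled).

    Training counts as stalled once `patience` epochs have passed without a
    new minimum, a common signal that the model has started to overfit.
    """
    best = min(range(len(val_losses)), key=lambda i: val_losses[i])
    stalled = (len(val_losses) - 1 - best) >= patience
    return best, stalled

# Made-up curves: training loss keeps falling while validation loss turns upward
train_loss = [1.10, 0.85, 0.66, 0.52, 0.43, 0.36, 0.30]
val_loss = [1.00, 0.80, 0.60, 0.55, 0.57, 0.60, 0.64]

epoch, stalled = best_epoch(val_loss, patience=3)
gap = val_loss[-1] - train_loss[-1]  # a widening train/val gap also hints at overfitting
print(f"best epoch: {epoch}, stalled: {stalled}, final gap: {gap:.2f}")
```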

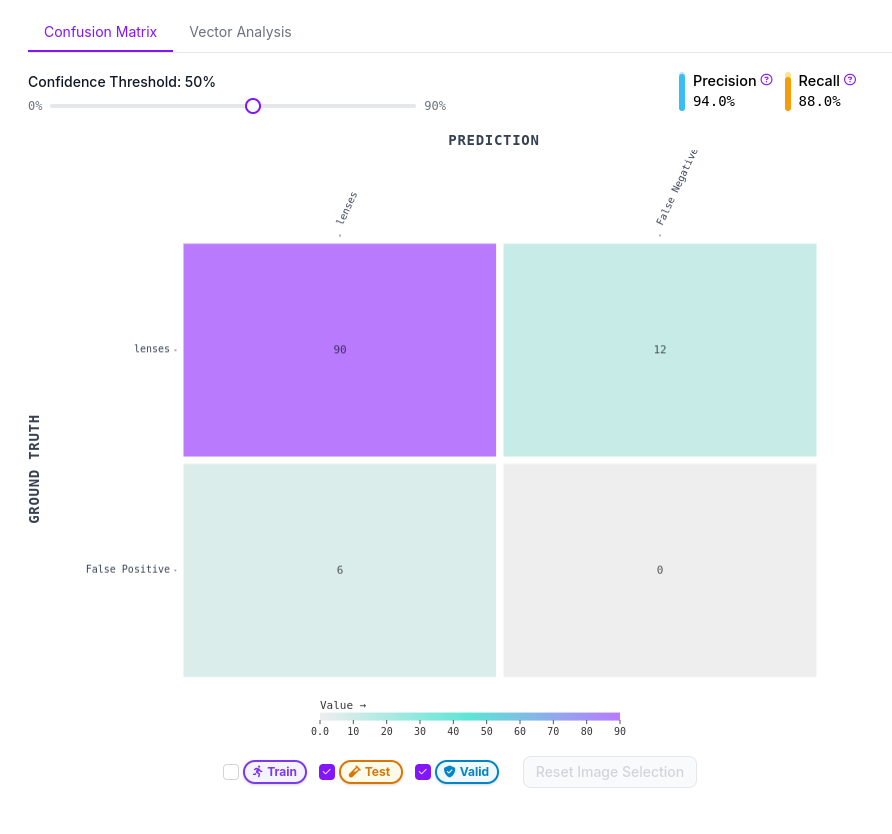

This confusion matrix provides valuable insights into the model's performance after training. It helps visualize how well the model is recognizing the sunglass lenses and how often it makes errors. Here's a breakdown of the confusion matrix:

- True Positives (90): These are the instances where the model correctly identified the lens.

- False Positives (6): These are the instances where the model mistakenly identified a non-lens region as a lens.

- False Negatives (12): These are the instances where the model failed to detect the lens, even though it was present in the image.

- True Negatives (0): In object detection, background that is correctly left unlabeled is not counted as a detection, so this cell stays at zero and plays no part in precision or recall.

The precision of 94% indicates that when the model predicts a lens, it is correct 94% of the time, while the recall of 88% shows that it correctly identified 88% of the actual lenses in the dataset.
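The two headline figures follow directly from the counts in the matrix: precision is TP / (TP + FP) = 90 / 96 = 0.9375, and recall is TP / (TP + FN) = 90 / 102 ≈ 0.882. The sketch also computes F1, their harmonic mean, which for these counts equals 2 * TP / (2 * TP + FP + FN) = 180 / 198 ≈ 0.909. `detection_metrics` is an illustrative helper name.

```python
def detection_metrics(tp, fp, fn):
    """Precision, recall and F1 from detection counts (true negatives are not needed)."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1

# Counts taken from the confusion matrix above
p, r, f1 = detection_metrics(tp=90, fp=6, fn=12)
print(f"precision={p:.3f} recall={r:.3f} f1={f1:.3f}")
```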

Overall, the model performs well, with high precision and reasonably good recall. Recall is the weaker of the two: the 12 missed lenses outnumber the 6 false detections, so reducing false negatives offers the larger gain. The next steps could include refining the dataset or fine-tuning the model further to improve these metrics.

Once the model was trained, I deployed it on Roboflow's cloud. By using the model ID, Roboflow key, and project name, I was able to seamlessly integrate the model into our application. This third-party connection allows our system to utilize the power of the trained model directly within the app, enabling real-time sunglasses lens customization for users.

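```python
import os

import cv2
from inference_sdk import InferenceHTTPClient

# Assumption: connection settings are read from environment variables
ROBOFLOW_API_URL = os.environ["ROBOFLOW_API_URL"]
ROBOFLOW_API_KEY = os.environ["ROBOFLOW_API_KEY"]
ROBOFLOW_TRAINED_MODEL = os.environ["ROBOFLOW_TRAINED_MODEL"]  # "<project>/<version>"

# Client for the trained model hosted on Roboflow's cloud
CLIENT = InferenceHTTPClient(
    api_url=ROBOFLOW_API_URL,
    api_key=ROBOFLOW_API_KEY,
)

image_cv2 = cv2.imread("sunglasses.jpg")  # hypothetical input path; loads a BGR array
result = CLIENT.infer(image_cv2, model_id=ROBOFLOW_TRAINED_MODEL)
```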

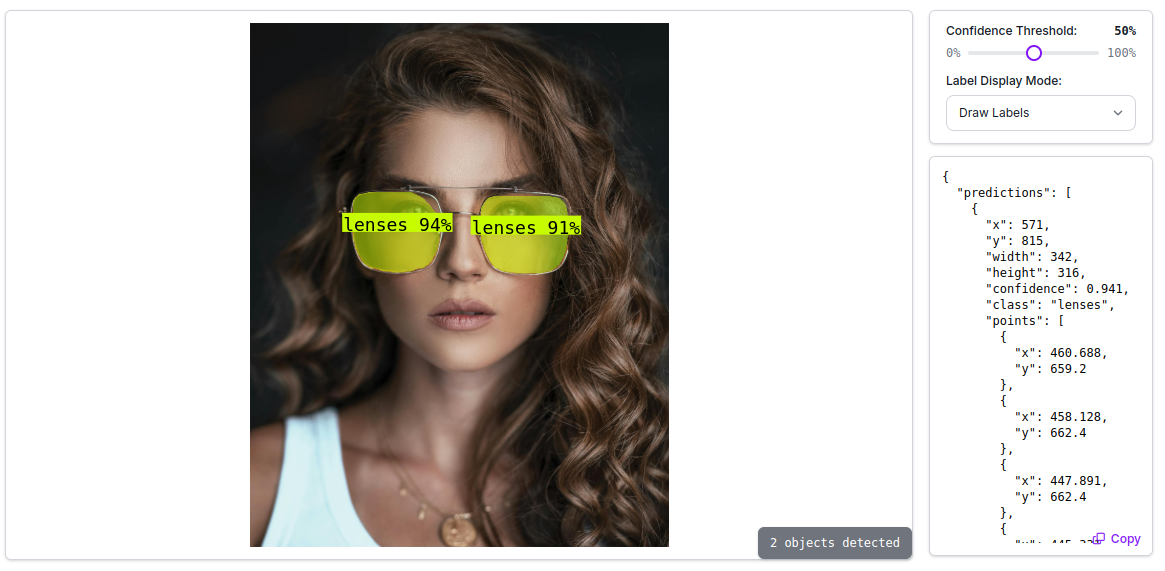

Take a look at the example of sunglasses detection:

- The image shows a woman wearing sunglasses, and Roboflow has detected the lenses with a high confidence level (94% and 91%).

- It returns bounding box coordinates, class labels (lenses), and the points where the lenses are located in the image. With this information, you can perform operations like highlighting the lenses, changing their color, or applying filters.
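A minimal sketch of extracting usable lens outlines from such a response, assuming it follows Roboflow's instance segmentation JSON shape: a `predictions` list whose entries carry `class`, `confidence` and a `points` list of `{"x": ..., "y": ...}` dicts. The helper name `lens_polygons` and the sample values are illustrative, not taken from the project's repository; the strict 0.9 threshold mirrors the over-90% confidence filter this project uses.

```python
import numpy as np

def lens_polygons(result, min_confidence=0.9, label="lenses"):
    """Return lens outlines whose confidence is strictly above `min_confidence`.

    Each outline is an int32 array of shape (N, 2) holding (x, y) pixel
    coordinates, a layout OpenCV's polygon drawing functions accept.
    """
    polygons = []
    for pred in result.get("predictions", []):
        if pred.get("class") != label or pred.get("confidence", 0.0) <= min_confidence:
            continue
        pts = [(p["x"], p["y"]) for p in pred.get("points", [])]
        if len(pts) >= 3:  # fewer than three points cannot enclose an area
            polygons.append(np.rint(np.array(pts)).astype(np.int32))
    return polygons

# Hypothetical response with one confident and one weak lens prediction
sample = {
    "predictions": [
        {"class": "lenses", "confidence": 0.94,
         "points": [{"x": 70.2, "y": 150.0}, {"x": 250.8, "y": 150.0}, {"x": 160.0, "y": 410.4}]},
        {"class": "lenses", "confidence": 0.62,
         "points": [{"x": 340.0, "y": 160.0}, {"x": 520.0, "y": 160.0}, {"x": 430.0, "y": 400.0}]},
    ]
}
polys = lens_polygons(sample)
print(len(polys), polys[0].tolist())
```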

Thanks to Roboflow's user-friendly interface and powerful machine learning capabilities, we were able to quickly build and deploy an accurate instance segmentation model that serves as the backbone of our sunglass color transformation tool.

Transforming Sunglasses with AI: Color Customization with Masking and Predictions

In this part of the project, we take the predictions made by the Roboflow model and use them to customize the colors of sunglasses in an image. Using computer vision techniques and the Roboflow API, we automatically detect the lens areas in an image and apply lens shades available on the market to create visually stunning results.

Step-by-Step Breakdown: Customizing Sunglass Colors

Here’s how we made it happen:

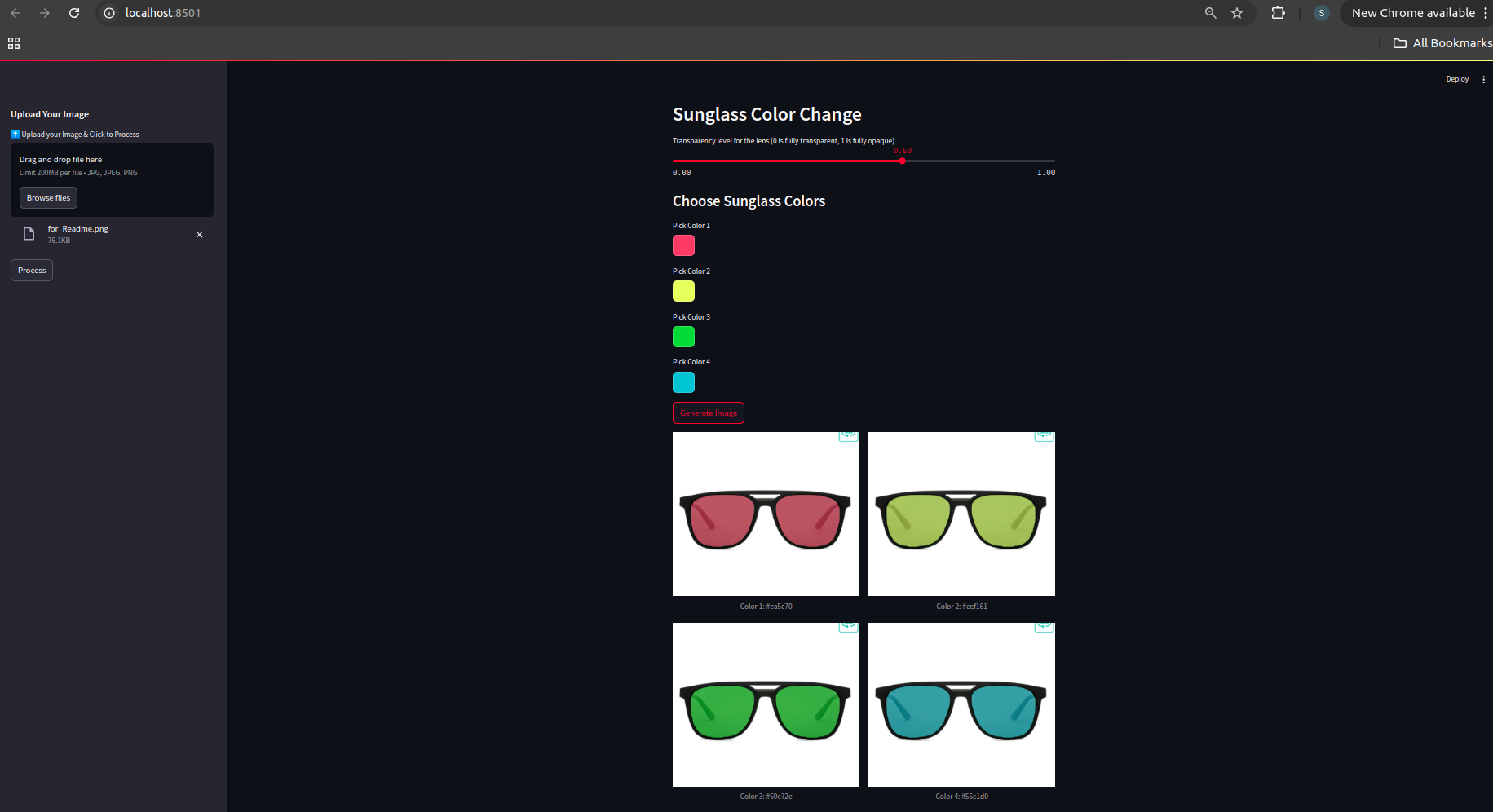

- Image Upload and Processing:
  - Users upload their image through Streamlit, and we use the Roboflow model to predict the regions of the image that correspond to the sunglass lenses.
  - The predictions, such as the points outlining each lens, are extracted and filtered to keep only high-confidence results (over 90%).
- Apply Color Masks:
  - With the lens areas detected, we apply color masks from a palette of sunglass shades.
  - The code uses OpenCV to create a mask over the predicted lens regions and blends the chosen color with the original image.
- Customization Options:
  - Users set the transparency of the lens color, from fully transparent to fully opaque, with a simple slider.
  - Users pick from a variety of colors to apply to the lenses using Streamlit's color picker, for a fully customized result.
- Result Generation:
  - After selecting the color and transparency, users click "Generate Image" to see the final result, with the lenses updated to the chosen shade.

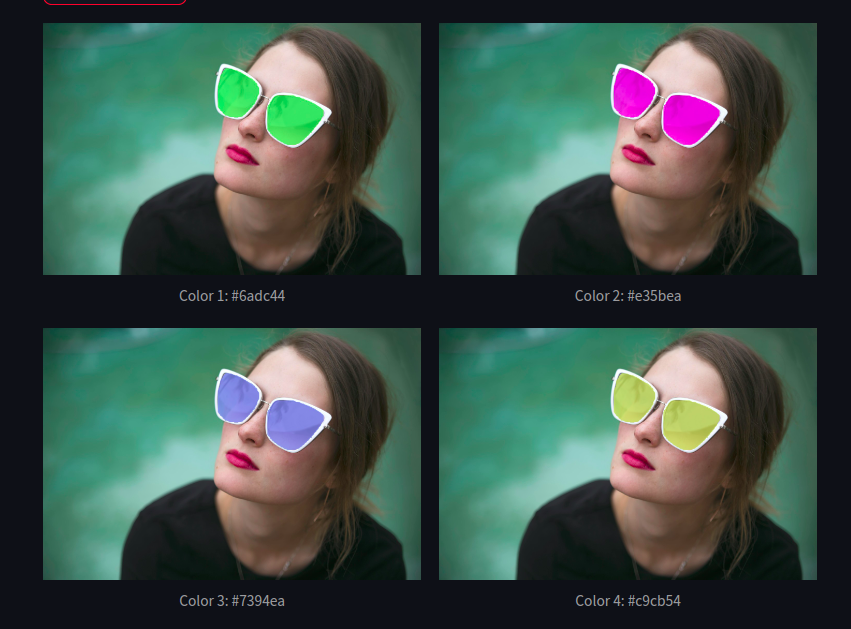

We have tested the model on numerous other images. In one example, four different color variations are applied to a pair of sunglasses worn by the same model. Each version is distinct, showing how color alone can dramatically change the appearance and perception of the same product, and how each shade can appeal to different tastes and preferences.

For the complete codebase, check out our GitHub repository! Dive in and explore the full potential of Sunglasses Shade Changer!

https://github.com/BlueBash/Sunglass-Shade-Changer

FAQ

1. What is Roboflow?

- Roboflow is a platform for creating, labeling, and managing computer vision datasets. It supports image classification, object detection, and segmentation tasks with tools for data augmentation.

2. What is the difference between instance segmentation and semantic segmentation?

- Instance segmentation detects object boundaries at the pixel level and differentiates between objects of the same class. Semantic segmentation labels pixels by object category without distinguishing individual instances.
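A toy NumPy example makes the distinction concrete for a pair of lenses: the semantic map gives both lenses the same label, while the instance map gives each lens its own id. The masks are hypothetical.

```python
import numpy as np

# Two hypothetical lens masks on a tiny 4x8 image
left = np.zeros((4, 8), dtype=bool)
left[1:3, 1:3] = True
right = np.zeros((4, 8), dtype=bool)
right[1:3, 5:7] = True

# Semantic segmentation: one class map, both lenses share label 1
semantic = (left | right).astype(np.uint8)

# Instance segmentation: each lens keeps its own id (1, 2, ...)
instance = np.zeros((4, 8), dtype=np.uint8)
for idx, m in enumerate([left, right], start=1):
    instance[m] = idx

print(np.unique(semantic), np.unique(instance))  # [0 1] [0 1 2]
```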

3. How does YOLO work for object detection?

- YOLO (You Only Look Once) detects objects in real time by dividing images into grids and predicting bounding boxes and class probabilities in a single network pass.
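In the original YOLO formulation, the image is split into an S x S grid (S = 7 in the first paper), and the cell containing an object's center is responsible for predicting it. Later YOLO versions predict at several scales, so this sketch only illustrates the original idea. It uses the two lens centers from the annotation example earlier in this article, on its 640 x 640 image; `responsible_cell` is a hypothetical helper.

```python
def responsible_cell(cx, cy, img_w, img_h, s):
    """Row and column of the S x S grid cell containing an object's center."""
    col = min(int(cx / img_w * s), s - 1)  # clamp centers on the right edge
    row = min(int(cy / img_h * s), s - 1)  # clamp centers on the bottom edge
    return row, col

print(responsible_cell(161.1855, 276.8795, 640, 640, 7))  # (3, 1)
print(responsible_cell(430.222, 267.8015, 640, 640, 7))   # (2, 4)
```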

4. How do I generate a custom dataset for object detection in Roboflow?

- Upload your images to Roboflow, annotate them using tools for bounding boxes or segmentation, and export the dataset in formats compatible with popular frameworks like YOLO or TensorFlow.

5. What is image masking used for in computer vision?

- Image masking isolates specific regions of interest by highlighting target areas, commonly used in tasks like segmentation, object recognition, and background removal.

6. How can OpenCV be used for object detection and segmentation?

- OpenCV supports object detection through classical methods such as Haar cascades and by running pretrained detectors such as YOLO through its DNN module, and it supports segmentation through techniques such as thresholding and contour detection.

7. What is Mask R-CNN for instance segmentation?

- Mask R-CNN is a deep learning model for instance segmentation that predicts both object masks and bounding boxes, extending Faster R-CNN by adding a mask prediction branch.

8. How do I export a dataset from Roboflow for YOLO?

- Annotate images in Roboflow, then export them in the YOLO format with class labels and bounding box coordinates, ready for YOLO model training.
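For segmentation datasets, the YOLO label format used by Ultralytics stores one object per line: a class index followed by the polygon's vertices, with each x divided by the image width and each y by the image height. A minimal sketch of that conversion, assuming the single class "lenses" maps to index 0; `to_yolo_seg_line` is a hypothetical helper, and Roboflow's exporter performs this step for you.

```python
def to_yolo_seg_line(class_id, points, img_w, img_h, ndigits=6):
    """Format one polygon as a YOLO segmentation label line with normalized coordinates."""
    # The polygon is treated as closed, so a repeated first vertex is redundant
    if len(points) > 1 and points[0] == points[-1]:
        points = points[:-1]
    coords = []
    for x, y in points:
        coords.append(f"{x / img_w:.{ndigits}f}")
        coords.append(f"{y / img_h:.{ndigits}f}")
    return " ".join([str(class_id)] + coords)

# Toy triangle on a 640x640 image, written with an explicit closing vertex
triangle = [[64, 128], [320, 128], [320, 384], [64, 128]]
print(to_yolo_seg_line(0, triangle, 640, 640, ndigits=3))  # 0 0.100 0.200 0.500 0.200 0.500 0.600
```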

9. Why is YOLO faster than other object detection models like Faster R-CNN?

- YOLO is faster because it performs object detection in a single pass through the network, unlike Faster R-CNN, which uses a multi-stage approach.

10. How do I create a custom instance segmentation model using YOLO and Roboflow?

- Upload and annotate your dataset in Roboflow, train a YOLO-based model with a segmentation head, and deploy it for instance segmentation tasks.